Since launching the Ask Luke feature on this website nearly two years ago, people have asked the system over 25,000 questions. But not all of them were getting answered, even when they could have been. Enter... a custom re-ranker.

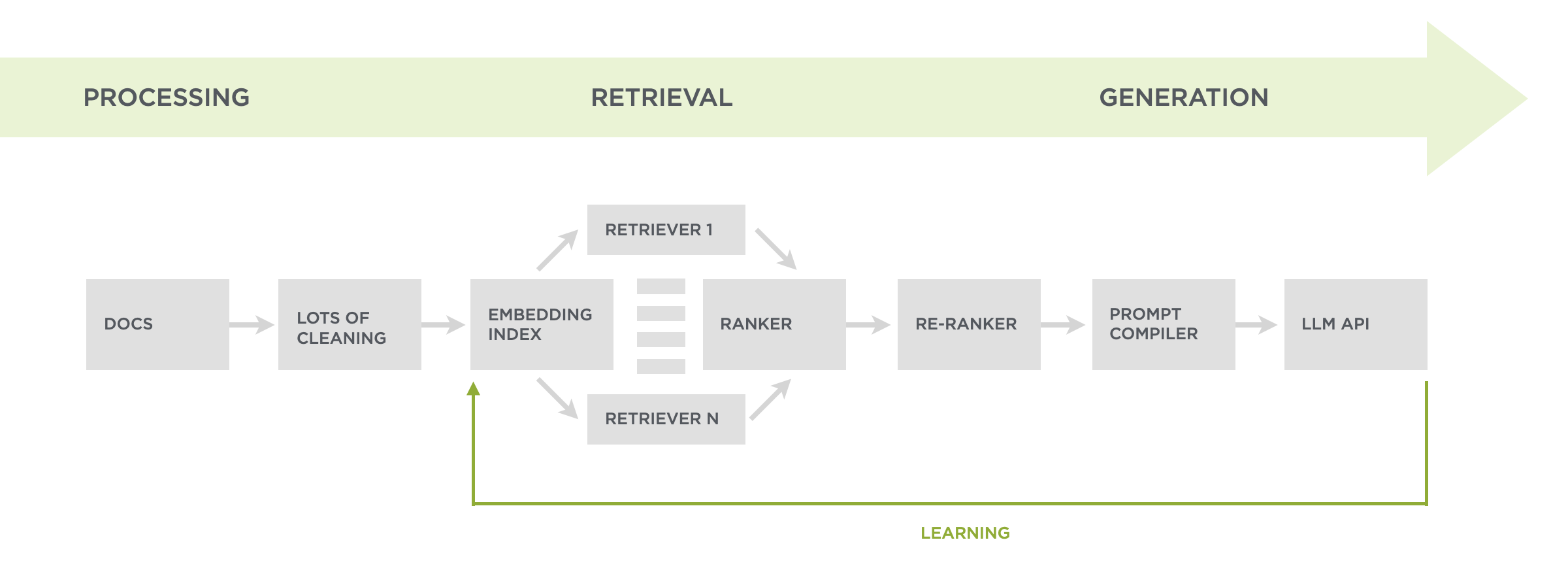

At a high level, Ask Luke makes use of the thousands of articles, hundreds of presentations, and more I've authored over the years to answer people's questions about digital product design. To do so, we first process and clean up all these files so we can retrieve the relevant parts of them when someone asks a question. After retrieval, those results are packaged up for Large Language Models to utilize when generating a reply.

To find the parts of all these documents that can best answer any given question, we do both an embedding search (in vector space) and a keyword search. This combination of retrieval techniques ensures we're finding content that talks about related topics and content that specifically matches unique terms. Keyword search was a later addition after we saw that embeddings, which are great at semantic search, could miss needles in the haystack, such as a specific term like PID.
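To make the combination concrete, here is a minimal sketch of merging an embedding search with a keyword search. It is not the actual Ask Luke implementation: the chunk records, the two-dimensional vectors, and the function names (`embedding_search`, `keyword_search`, `hybrid_search`) are all made up for illustration, and a real system would get its vectors from an embedding model and use a proper keyword index.

```python
import math

# Toy corpus: ids, text, and hand-written 2-D vectors are illustrative assumptions.
CHUNKS = [
    {"id": "forms", "text": "Designing web forms for mobile", "vector": [0.9, 0.1]},
    {"id": "pid", "text": "Notes on PID in product design", "vector": [0.1, 0.9]},
    {"id": "nav", "text": "Mobile navigation patterns", "vector": [0.8, 0.3]},
]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def embedding_search(query_vec, chunks, top_k=3):
    """Return the ids of the top_k chunks closest to the query in vector space."""
    scored = sorted(
        ((cosine(query_vec, c["vector"]), c["id"]) for c in chunks),
        reverse=True,
    )
    return [chunk_id for _, chunk_id in scored[:top_k]]

def keyword_search(query, chunks):
    """Return the ids of chunks sharing at least one exact word with the query."""
    terms = {t.lower() for t in query.split()}
    return [c["id"] for c in chunks if terms & set(c["text"].lower().split())]

def hybrid_search(query, query_vec, chunks, top_k=3):
    """Union of both searches, embedding hits first, with duplicates removed."""
    merged = []
    for chunk_id in embedding_search(query_vec, chunks, top_k) + keyword_search(query, chunks):
        if chunk_id not in merged:
            merged.append(chunk_id)
    return merged

# The query vector sits far from the "pid" chunk, so only keyword search finds it.
hits = hybrid_search("What is PID", [1.0, 0.0], CHUNKS, top_k=1)
```

In this toy setup the embedding search alone returns only the `forms` chunk, while the keyword match on "PID" pulls in the chunk that the vectors missed.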

The results of both these searches get diversified to make sure we're not just repeating the same content. For example, I've given the same talk at different events, so there's no need to use two versions. What's left of our search results is then filtered by a relevance score. If a result meets the threshold, we include it in our instructions for whatever Large Language Model is being used for generation. Usually we fill up an LLM's context window with about ten results.
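As a rough sketch of those two steps, the snippet below first diversifies results and then filters them by relevance. The `group` key (standing in for "same talk, different event"), the `0.75` threshold, and the function names are assumptions for illustration; the article does not say how duplicates are detected or what the actual threshold is.

```python
def diversify(results):
    """Keep only the highest-scoring result from each group of near-duplicates."""
    best_per_group = {}
    for r in results:
        current = best_per_group.get(r["group"])
        if current is None or r["score"] > current["score"]:
            best_per_group[r["group"]] = r
    return sorted(best_per_group.values(), key=lambda r: r["score"], reverse=True)

def filter_by_relevance(results, threshold=0.75, max_results=10):
    """Keep results at or above the threshold, capped at max_results."""
    return [r for r in results if r["score"] >= threshold][:max_results]

# Hypothetical search results: two versions of the same talk plus two articles.
results = [
    {"id": "talk-2019", "group": "mobile-talk", "score": 0.90},
    {"id": "talk-2020", "group": "mobile-talk", "score": 0.85},
    {"id": "article-1", "group": "article-1", "score": 0.80},
    {"id": "article-2", "group": "article-2", "score": 0.40},
]

context = filter_by_relevance(diversify(results))
context_ids = [r["id"] for r in context]
```

Here the second version of the talk is dropped as a duplicate and `article-2` falls below the threshold, leaving two results for the model's instructions.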

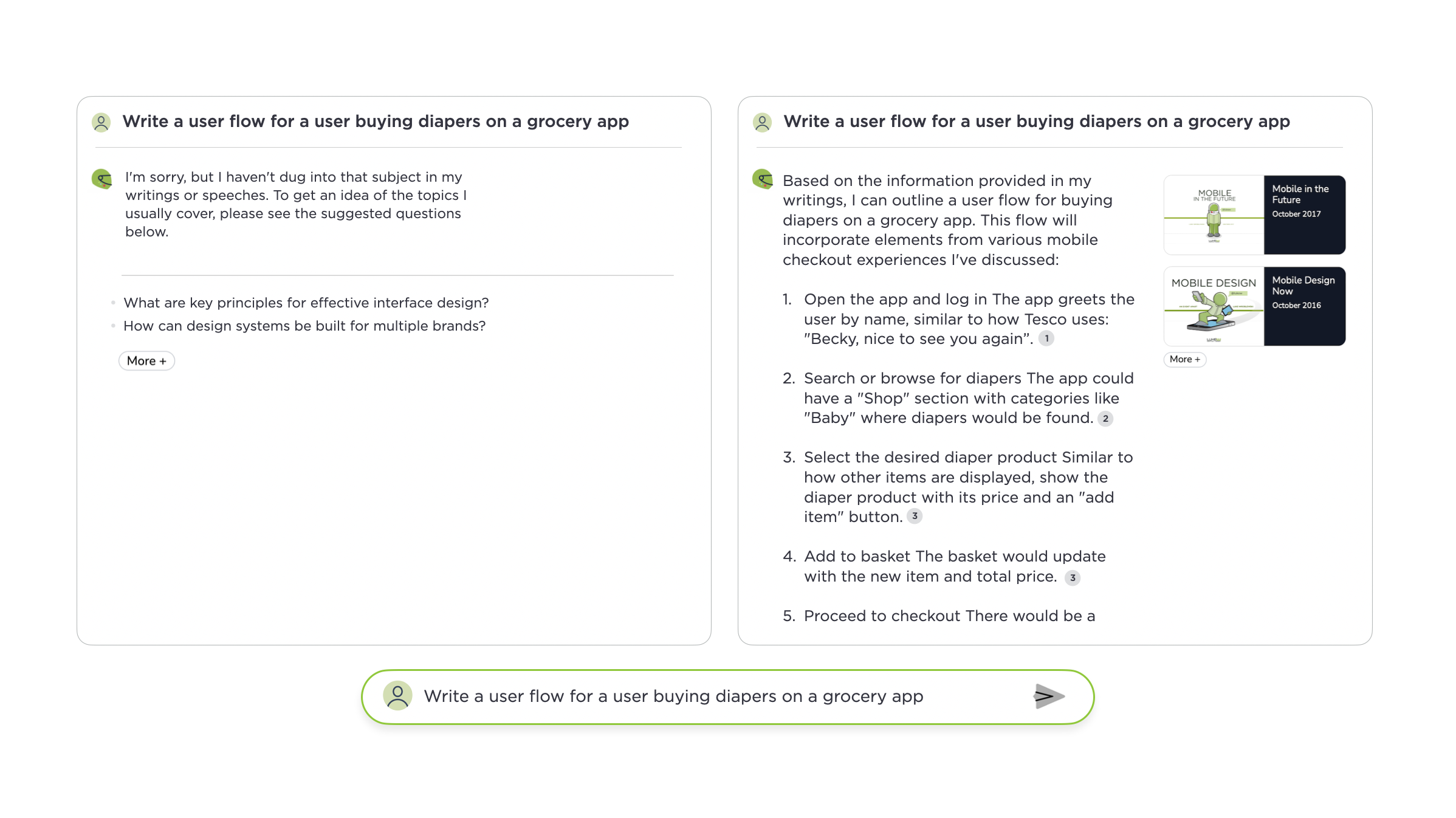

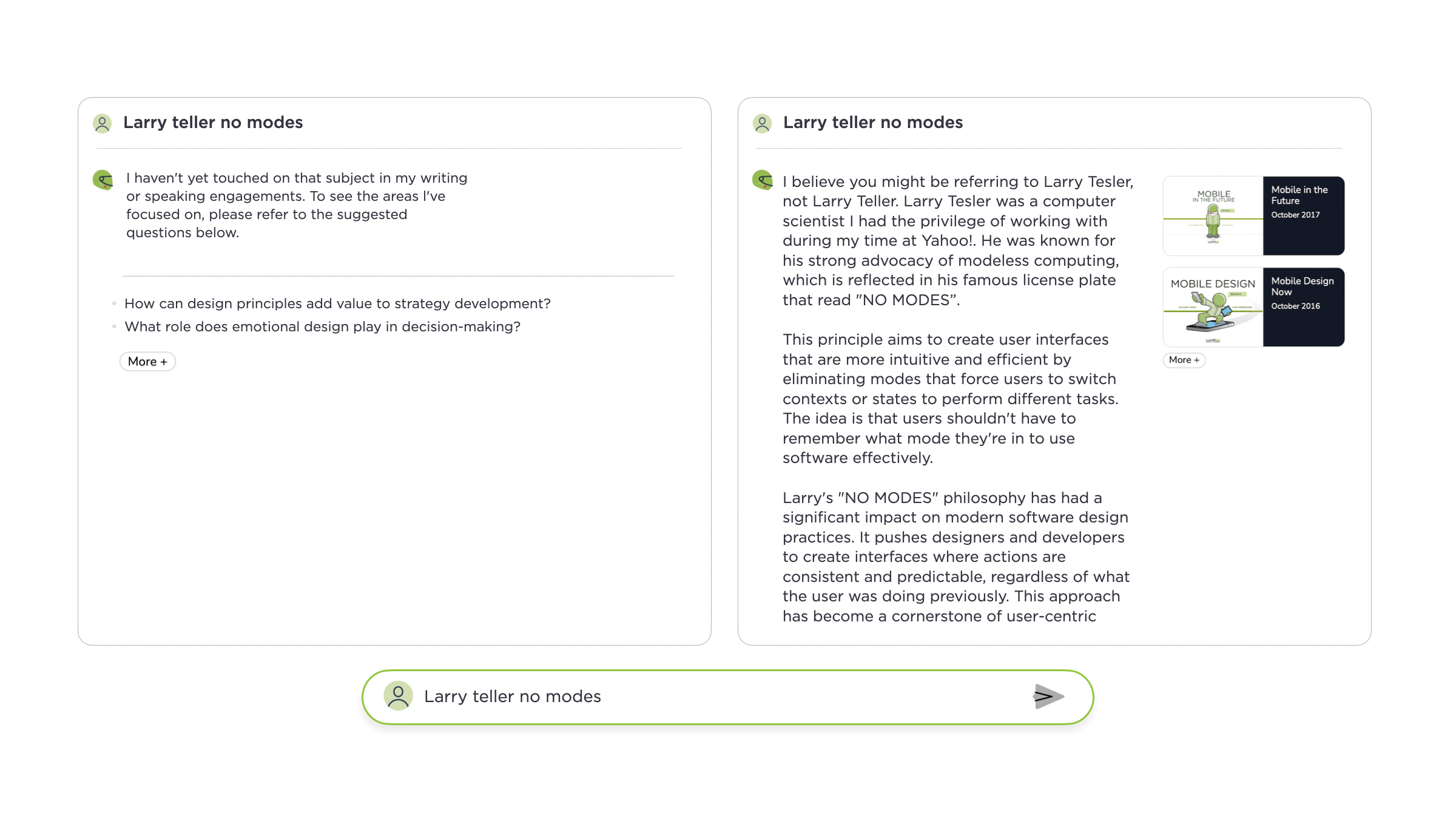

While these retrieval techniques work to answer most people's questions, they sometimes miss out on useful but not directly relevant content. So why not just lower the threshold to make use of more content when responding? We tried, but irrelevant content would regularly pollute answers. After some experimentation, a custom re-ranker helped the most to expand coverage while maintaining quality. Questions that were not answered before now had useful replies, as the images above and below illustrate.

What does the re-ranker do? If we don't have ten results that meet our relevance threshold, we take any results that meet a lower threshold and send them (in parallel) to a fast AI model (like Gemini Flash 2.0) that evaluates how well each could answer the question. Any results deemed useful are then used to backfill the instructions for content generation, resulting in a wider set of questions we can answer well.
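The backfill logic might look something like the sketch below. It is only an illustration under stated assumptions: the `high` and `low` threshold values, the `backfill_with_reranker` name, and the `toy_judge` function are all hypothetical. In particular, `toy_judge` is a simple word-overlap stand-in so the example runs on its own; in practice that call would go to a fast model that judges whether a passage can help answer the question.

```python
from concurrent.futures import ThreadPoolExecutor

def toy_judge(question, text):
    """Stand-in for a fast AI model: 'useful' if any question word appears in the text."""
    return any(word in text.lower() for word in question.lower().split())

def backfill_with_reranker(question, results, judge, high=0.75, low=0.5, target=10):
    """Use high-scoring results first, then backfill with judged lower-scoring ones."""
    ranked = sorted(results, key=lambda r: r["score"], reverse=True)
    selected = [r for r in ranked if r["score"] >= high][:target]
    if len(selected) >= target:
        return selected  # enough strong results, no re-ranking needed

    # Candidates clear the lower threshold but not the main one.
    candidates = [r for r in ranked if low <= r["score"] < high]
    if not candidates:
        return selected

    # Evaluate every candidate in parallel; pool.map preserves input order.
    with ThreadPoolExecutor(max_workers=8) as pool:
        verdicts = list(pool.map(lambda r: judge(question, r["text"]), candidates))

    useful = [r for r, ok in zip(candidates, verdicts) if ok]
    return selected + useful[: target - len(selected)]

# Hypothetical results for one question.
results = [
    {"id": "a", "text": "Mobile onboarding patterns", "score": 0.90},
    {"id": "b", "text": "Onboarding checklists that work", "score": 0.60},
    {"id": "c", "text": "Conference travel notes", "score": 0.55},
    {"id": "d", "text": "Onboarding basics", "score": 0.20},
]

final = backfill_with_reranker("onboarding tips", results, toy_judge)
final_ids = [r["id"] for r in final]
```

Result `a` is included outright, `b` is backfilled because the judge finds it useful, `c` is rejected by the judge, and `d` never reaches the judge because it falls below the lower threshold. Keeping the main threshold in place and only judging the middle band is what lets coverage expand without simply lowering the bar for everything.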

Further Reading

Additional articles about what I've tried and learned by rethinking the design and development of my website using large-scale AI models.

- New Ways into Web Content: rethinking how to design software with AI

- Integrated Audio Experiences & Memory: enabling specific content experiences

- Expanding Conversational User Interfaces: extending chat user interfaces

- Integrated Video Experiences: adding video experiences to conversational UI

- Integrated PDF Experiences: unique considerations when adding PDF experiences

- Dynamic Preview Cards: improving how generated answers are shared

- Text Generation Differences: testing the impact of new AI models

- PDF Parsing with Vision Models: using AI vision models to extract PDF contents

- Streaming Citations: citing relevant articles, videos, PDFs, etc. in real-time

- Streaming Inline Images: indexing & displaying relevant images in answers

- Custom Re-ranker: improving content retrieval to answer more questions

Acknowledgments

Big thanks to Kian Sutarwala and Alex Peysakhovich for the development and AI research help.