Like many technology nerds out there, I got to explore Apple's new Vision Pro headset and Spatial Computing operating system first hand this weekend. Instead of a product review (there are plenty out there already), here are my initial thoughts on the platform interactions and user interface potential. So basically a UI nerd's take on things.

Apple's vision of Spatial Computing essentially has two modes: an infinite canvas of windows and their corresponding apps, which can be placed and interacted with anywhere within your surroundings, and an immersive mode that replaces your physical surroundings with a fully digital environment. Basically a more Augmented Reality (AR)-ish mode and a more Virtual Reality (VR)-ish mode.

Whether viewing a panoramic photo, exploring an environment, or watching videos in cinematic mode, the ability to fully enter a virtual space is really well done. Experiences made for this format are at the top of my list to try out. It's where things become possible that make use of and alter the entirety of the space around you. That said, the apps and content that make use of this capability are few and far between right now.

But I expect lots of experimentation and some truly spatial-computing-first interactions to emerge. Kind of like the way Angry Birds fully embraced multi-touch on the iPad and created a unique form of gameplay as a result.

While definitely being used to render the spatial OS, the deep understanding Apple Vision Pro's camera and sensor system has of your environment feels under-utilized by apps so far. I say this without a deep understanding of the APIs available to developers but when I see examples of the data Apple Vision Pro has available (video below), it feels like more is possible.

So that's certainly compelling but what about the app environment, infinite windows, and getting things done in the AR-ish side of Spatial OS? With this I worry Apple's ecosystem might be holding them back vs. moving them forward. The popular consensus is that having a deep catalog of apps and developers is a huge advantage for a new platform. And Apple's design team has made it clear that they're leaning into this existing model as a way to transition users toward something new.

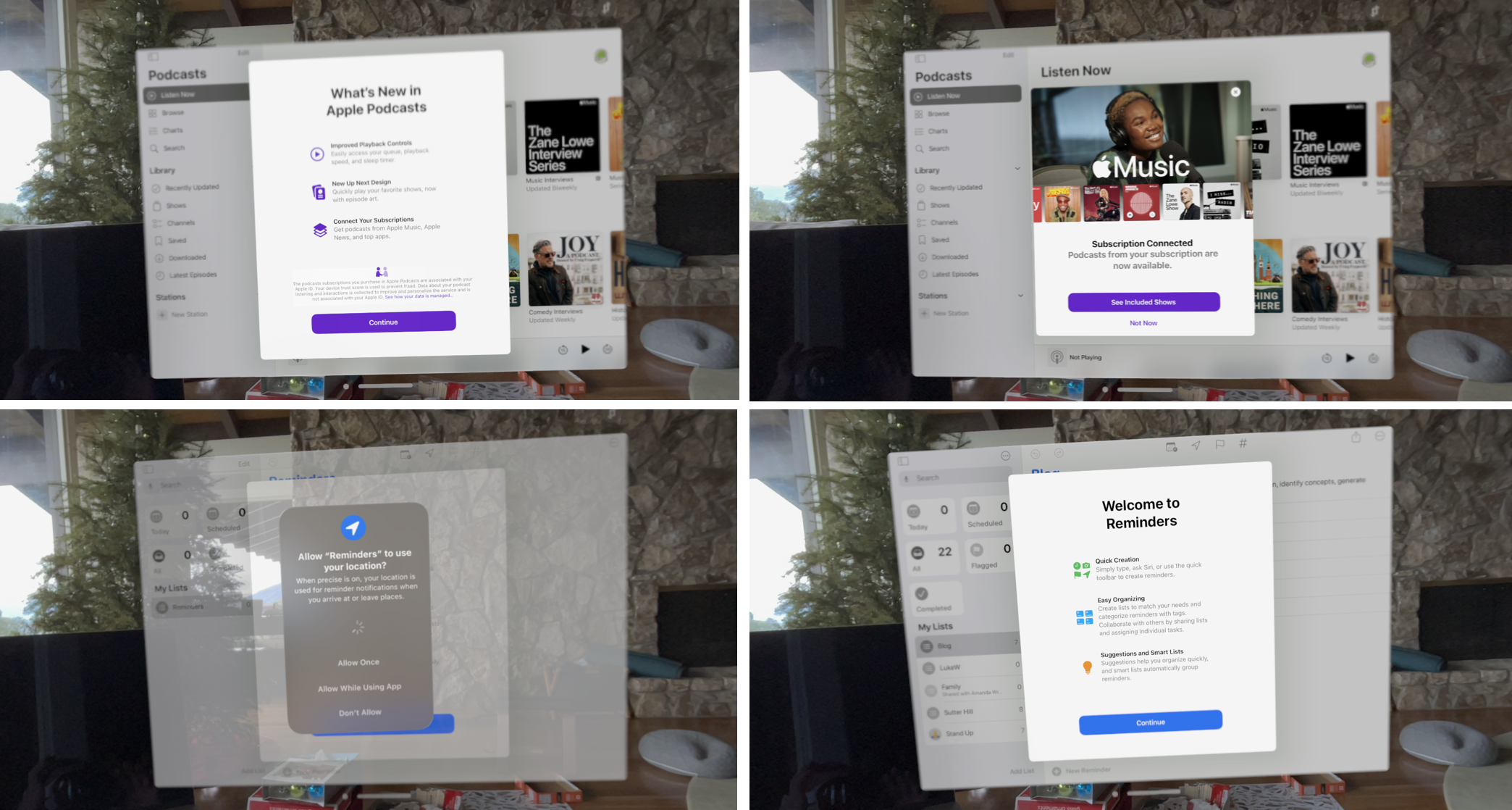

But that also means all the bad stuff comes along with the good. At a micro level, I found it very incongruent with the future of computing to face a barrage of pop-up modal windows during device setup and every time I accessed a new app. I know these are consistent patterns on macOS, iOS, and iPadOS but that's the point: do they belong in a spatial OS? And frankly given their prominence, frequency, and annoyance... do they belong in any OS?

Similarly, retaining the WIMP paradigm (windows, icons, menus, and pointers) might help bridge the gap for people familiar with iPhones and Macs, but making this work with the Vision Pro's eye tracking and hand gestures, while technically very impressive, created a bunch of frustration for me. It's easily the best eye and hand tracking I've experienced but I still ended up making a bunch of mistakes with unintended consequences. Yes, I'm going to re-calibrate to see if it fixes things but my broader point stands.

Is Apple now locked in a habit of porting their ecosystem from screen to screen to screen? And, as a result, tethered to too many constraints, requirements, and paradigms about what an app is and how we should interact with it? Were they burned by skeuomorphic design and no longer want to push the user interface in non-conventional ways?

One approach might be to look outside of WIMP and lean more into a model like OCGM (objects, containers, gestures, manipulations) designed for natural user interfaces (NUI). Another is starting simple, from the ground up. As a counterexample to Apple Vision Pro, consider Meta's Ray-Ban glasses. They are light, simple, and relatively cheap. For input there's an ultra-wide 12 MP camera and a five-microphone array. The only user interface is your voice and a single hardware button.

When combined with vision and language AI models, this simple set of controls offers up a different way of interacting with reality than the Apple Vision Pro. One without an existing app ecosystem, without windows, menus, and icons. But potentially one with a new way of bringing computing capabilities to the real world around us.

Which direction this all goes... we'll see. But it's great to have these two distinct visions for bringing compute capabilities to our eyes.