Hang around software companies long enough and you'll certainly hear someone proclaim "the best interface is invisible." While this adage seems inevitable, today's device ecosystem makes it clear we may not be there yet.

When there's no graphical user interface (icons, labels, etc.) in a product to guide us, our memory becomes the UI. That is, we need to remember the hidden voice and gesture commands that operate our devices. And these controls are likely to differ from device to device, making the task even harder.

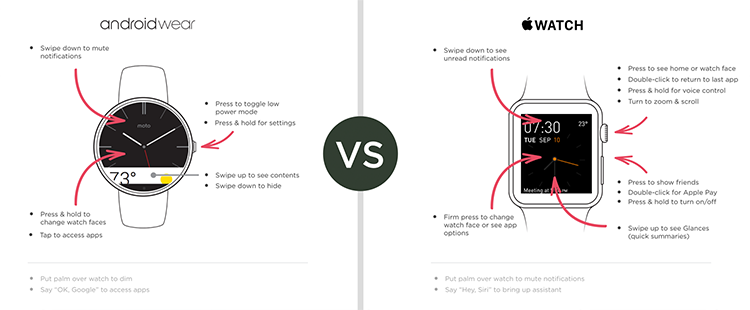

Consider the number of gesture interfaces on today's smartwatch home screens. A swipe in any direction, a tap, and a long press each trigger different commands. Even after months of use, I still find myself forgetting about hidden gestures in these UIs. Perhaps Apple's 80+ page guide to their watch is telling: it takes a lot of learning to operate a hidden interface.

So how can we make hidden interfaces more usable? Enforce more consistency, align with natural actions (video below), allow natural speech, include clear cues, and ...?