We've all heard the adage the "the future is here it just isn't evenly distributed yet. With rapid advancement in multi-modal AI models and headset computing, we're at a stage where the future is clear but is isn't implemented (yet). Here's two examples:

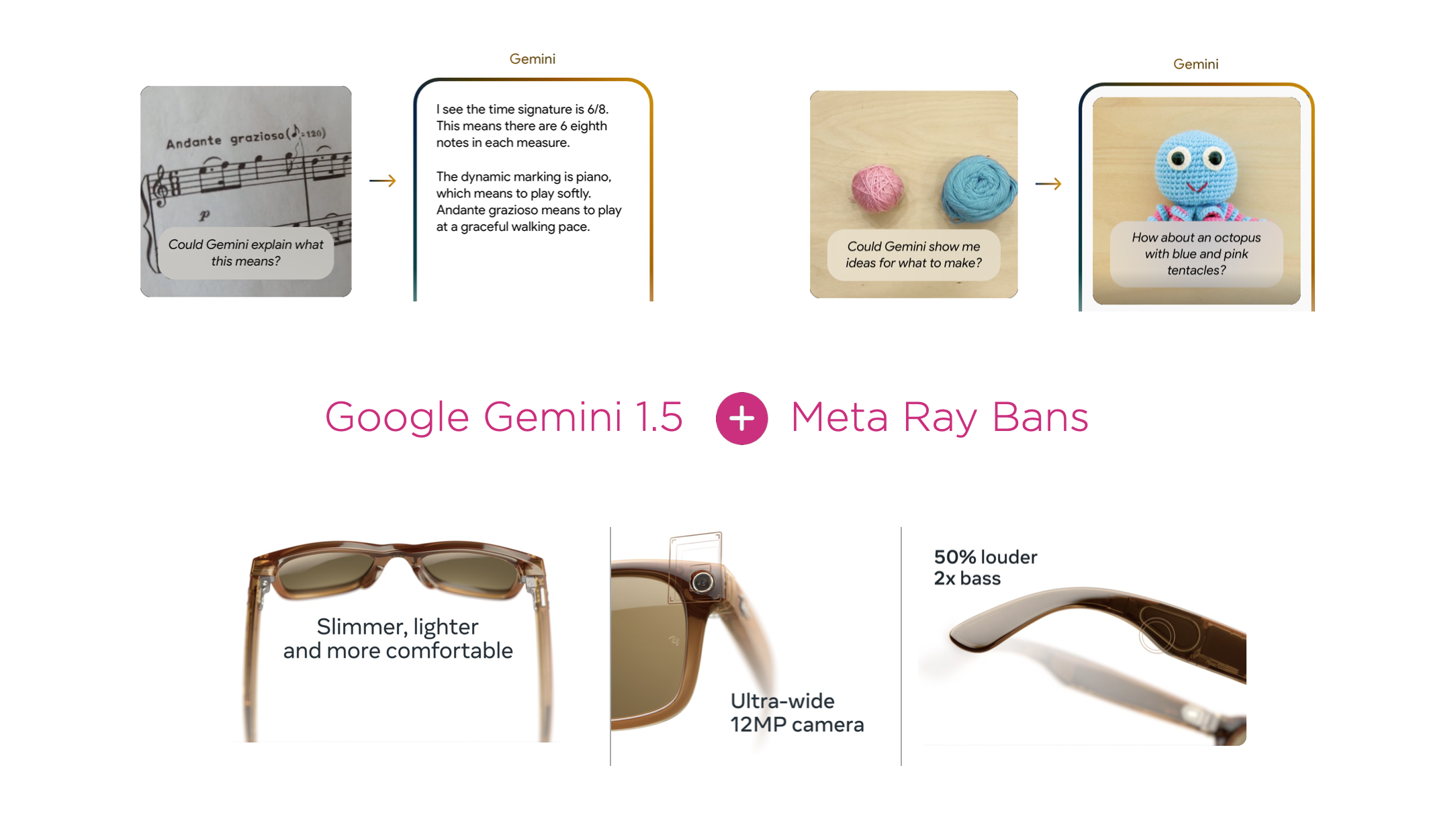

Multi-modal AI models can take videos, images, audio, and text as input and apply them as context to provide relevant responses and actions. Coupled with a lightweight headset with a camera, microphone, and speakers, this provides people with new ways to understand and interact with the World around them.

While these capabilities both exist, large AI models can't run locally on glasses ...yet. But the speed and cost of running models keeps decreasing while their abilities keep increasing.

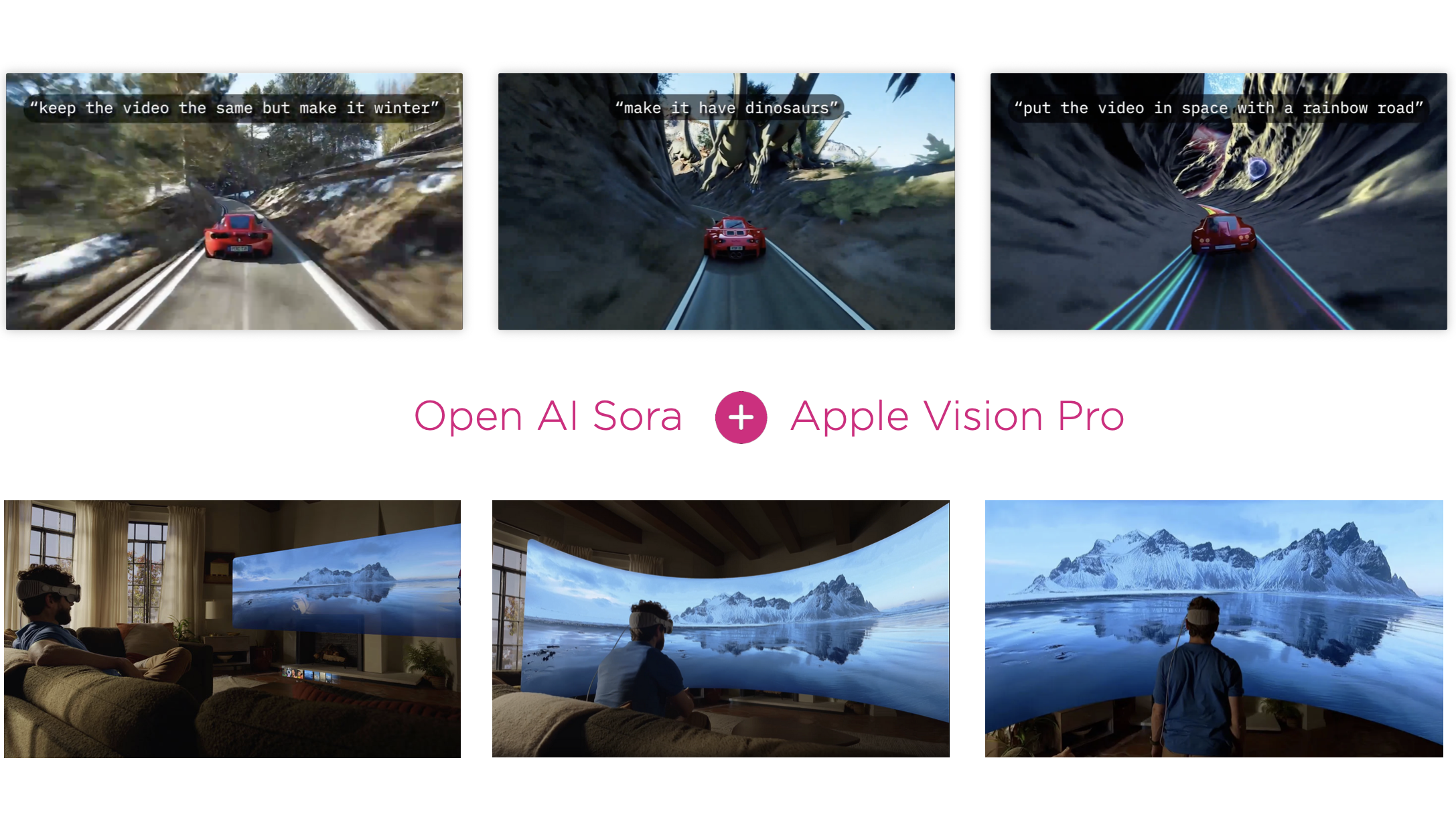

Video generation models can not only go from text to video, image to video, but also video to video. This enables people to modify what they're watching on the fly. Coupled with immersive video on a spatial computing platform, this enables dynamic environments, entertainment, and more.

Again these capabilities exist seperately but the kind of instant immersive (high resolution) video generations needed for Apple's Vision Pro format isn't here... yet.