In his AI Speaker Series presentation at Sutter Hill Ventures, Joon Park discussed his work on generative AI agents, their architecture, and what we might learn from them about human behavior. Here are my notes from his talk:

- Can we create computer-generated behavior that simulates human behavior in a compelling way? This has been very difficult to date, but LLMs offer a new way to tackle the problem.

- The way we behave and communicate is far too vast and complex for us to reproduce with existing methods.

- Large language models (LLMs) are trained on broad data that reflects our lives, such as the traces we leave on the social web, Wikipedia, and more. As a result, these models encode a tremendous amount about us: how we live, talk, and behave.

- With the right method, LLMs can become the core ingredient that was missing from past attempts to simulate human behavior.

- Generative agents are a new way to simulate human behavior using LLMs. They pair an LLM with an agent architecture that remembers, reflects, and plans based on constantly growing memories and cascading social dynamics.

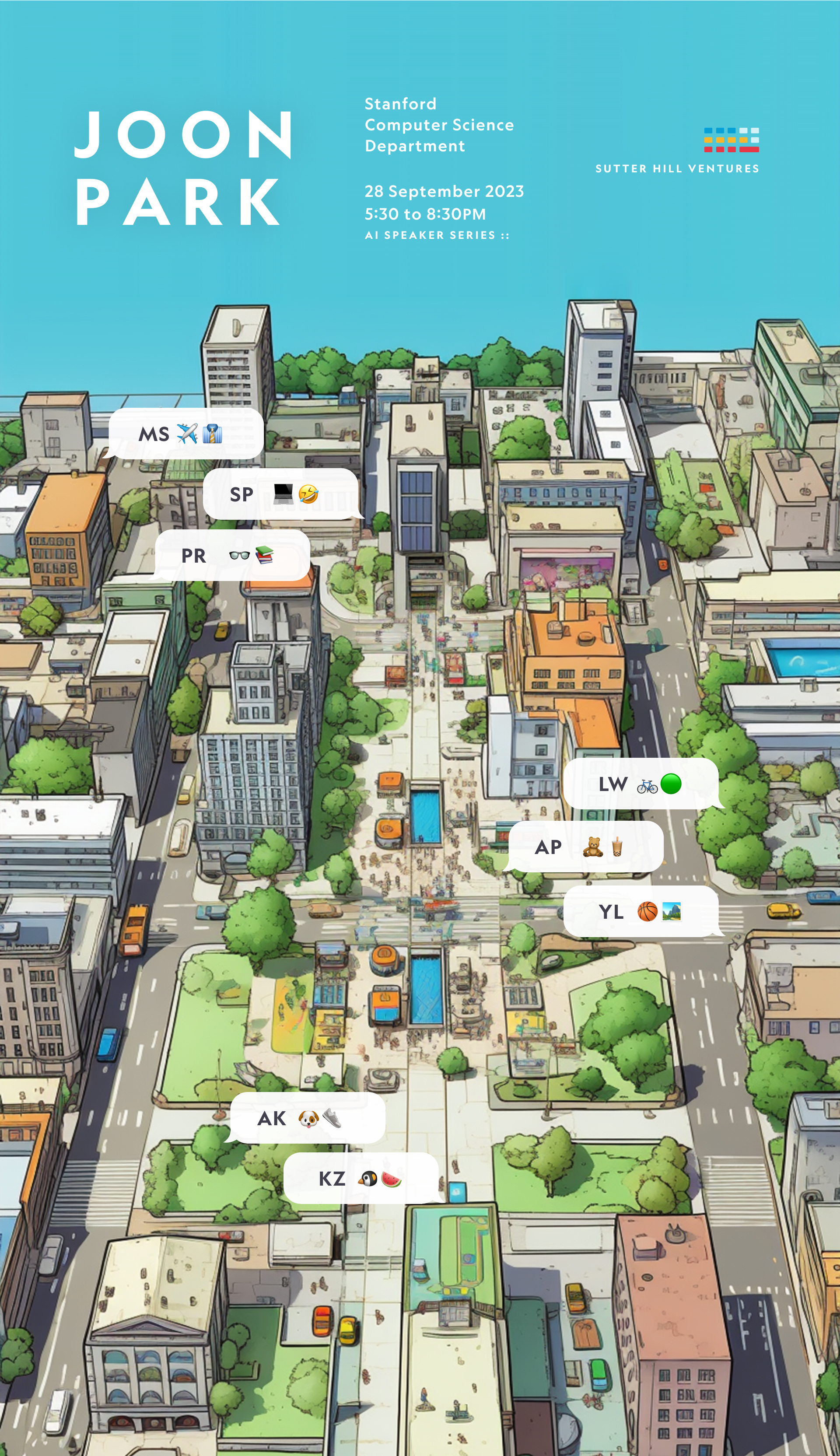

Smallville Simulation

- Smallville is a custom-built game world that simulates a small village. Its 25 generative agents are each initialized with a one-paragraph description of their personality and motivations. No other information is provided to them.

- As individuals, agents make plans and execute them. They wake up in the morning, go through their routines, and go to work in the sandbox game environment.

- First, an agent generates a natural language statement describing its current action. It then translates this statement into concrete, grounded movements that can affect the sandbox game environment.

- Agents actually change the state of objects in this world. For example, a refrigerator can become empty after an agent uses it to make breakfast.

- When agents see each other, they decide whether to engage in conversation, and if they do, they generate the actual dialogue.

- Just as agents converse with each other, a user can converse with them by specifying a persona, for instance, a news reporter.

- Users can also alter the state of the agents' environment, control an agent, or enter the world as an outside visitor.

- In this simulation, information diffuses across the community as agents share information with each other and form new relationships.
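To make the grounding step concrete, here is a minimal Python sketch, assuming a world made of named areas that contain objects with a text state. The names `GameObject`, `Area`, `ground_action`, and `apply_action` are hypothetical. Where the described system would rely on the LLM to choose a location and a new object state, this sketch substitutes a simple keyword match and a state supplied by the caller.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GameObject:
    name: str
    state: str = "idle"


@dataclass
class Area:
    name: str
    objects: dict[str, GameObject] = field(default_factory=dict)


def ground_action(action: str, areas: dict[str, Area]) -> tuple[str, str] | None:
    """Map a natural-language action onto an (area, object) pair.

    Hypothetical stand-in: the described system uses an LLM for this step;
    here we simply pick the first object whose name appears in the text.
    """
    text = action.lower()
    for area in areas.values():
        for obj in area.objects.values():
            if obj.name in text:
                return area.name, obj.name
    return None


def apply_action(action: str, new_state: str, areas: dict[str, Area]) -> str | None:
    """Ground the action, update the target object's state, and report where it happened."""
    target = ground_action(action, areas)
    if target is None:
        return None  # nothing in the world matched the action
    area_name, obj_name = target
    areas[area_name].objects[obj_name].state = new_state
    return f"{area_name}:{obj_name}"


kitchen = Area("kitchen", {
    "refrigerator": GameObject("refrigerator", "stocked"),
    "stove": GameObject("stove"),
})
world = {"kitchen": kitchen}
print(apply_action("making breakfast with food from the refrigerator", "empty", world))
print(kitchen.objects["refrigerator"].state)
```

Keeping object state in the world model, rather than only in the agent's text, is what lets other agents later observe the empty refrigerator.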

Agent Architecture

- At the center of the architecture that powers generative agents is a memory stream that maintains a record of each agent's experiences in natural language.

- Records are retrieved from the memory stream as they become relevant to the agent's cognitive processes. A retrieval function takes the agent's current situation as input and returns a subset of the memory stream to pass to an LLM, which then generates the agent's final output behavior.

- Retrieval scores each piece of memory with a linear combination of three functions: recency, importance, and relevance.

- The importance function is a prompt that asks the large language model to rate how important an event is. You're basically telling the agent in natural language, "This is who you are. How important is this to you?"

- Relevance measures how closely a memory relates to the agent's current situation. Separately, a reflection process synthesizes records of an agent's memory into higher-level, abstract thoughts called reflections. Once synthesized, reflections are just another type of memory and are stored in the memory stream alongside raw observational memories.

- Over time, this generates trees of reflections whose leaf nodes are the observations. As you move up the tree, the reflections start to answer core questions about who the agent is, what drives them, and what they like.

- Generating plausible behavior in response to each moment can sacrifice coherence over longer stretches of time. Agents need to plan over a longer time horizon than just the present.

- Plans describe a future sequence of actions for the agent and help keep its behavior consistent over time. They are generated by a prompt that summarizes the agent and its current status.

- To control granularity, plans are generated top-down: first in large chunks, then broken into hourly blocks, and finally into 1-15 minute increments.
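The retrieval score can be sketched in Python as a weighted sum. This is an illustration under stated assumptions, not the talk's implementation: relevance is taken as cosine similarity between embedding vectors, recency as exponential decay (an assumed factor of 0.995 per game hour) since a memory was last accessed, each component is min-max normalized, and the weights default to 1. `Memory`, `retrieve`, and the toy two-dimensional embeddings are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    last_accessed_hour: float  # game time (in hours) when the memory was last accessed
    importance: float          # assumed: an LLM rating on a 1-10 scale
    embedding: list[float]     # assumed: an embedding vector of the memory text


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    """Rescale scores to [0, 1] so the three components are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # a constant component cannot change the ranking
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(
    memories: list[Memory],
    query_embedding: list[float],
    now_hour: float,
    k: int = 3,
    decay: float = 0.995,
    weights: tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> list[Memory]:
    """Return the top-k memories by a weighted sum of recency, importance, and relevance."""
    if not memories:
        return []
    recency = [decay ** (now_hour - m.last_accessed_hour) for m in memories]
    importance = [m.importance for m in memories]
    relevance = [cosine(m.embedding, query_embedding) for m in memories]
    rec, imp, rel = min_max(recency), min_max(importance), min_max(relevance)
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * a + w_imp * b + w_rel * c for a, b, c in zip(rec, imp, rel)]
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    return [m for _, m in ranked[:k]]


memories = [
    Memory("planning a Valentine's Day party", 10, 8, [1.0, 0.0]),
    Memory("the stove is idle", 11, 1, [0.0, 1.0]),
    Memory("a neighbor is writing a research paper", 2, 5, [0.7, 0.7]),
]
top = retrieve(memories, query_embedding=[1.0, 0.0], now_hour=12, k=2)
print([m.text for m in top])
```

Normalizing before summing keeps a component with a wide numeric range, such as a 1-10 importance rating, from drowning out the others.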
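Reflections can be modeled as ordinary memory records that point back to the records they were synthesized from, which is what produces a tree with observations at the leaves. In this hypothetical Python sketch, the LLM step that writes each insight is replaced by text passed in by the caller, and `MemoryStream.depth` measures how far a record sits above the observation leaves; `Record` and `MemoryStream` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Record:
    id: int
    text: str
    kind: str  # "observation" or "reflection"
    evidence: list[int] = field(default_factory=list)  # ids of the records it was synthesized from


class MemoryStream:
    def __init__(self) -> None:
        self.records: list[Record] = []

    def add(self, text: str, kind: str = "observation", evidence: list[int] | None = None) -> Record:
        record = Record(len(self.records), text, kind, list(evidence or []))
        self.records.append(record)
        return record

    def reflect(self, evidence: list[int], insight: str) -> Record:
        # Hypothetical: an LLM would write the insight from the evidence records;
        # here the caller supplies it. The reflection joins the same stream.
        return self.add(insight, kind="reflection", evidence=evidence)

    def depth(self, record_id: int) -> int:
        """Observations are leaves (depth 0); a reflection sits one level above its deepest evidence."""
        record = self.records[record_id]
        if not record.evidence:
            return 0
        return 1 + max(self.depth(i) for i in record.evidence)


stream = MemoryStream()
a = stream.add("read an article about gentrification")
b = stream.add("spent the afternoon drafting a research paper")
c = stream.add("asked the librarian for books on urban history")
r1 = stream.reflect([a.id, b.id], "is dedicated to their research")
r2 = stream.reflect([r1.id, c.id], "is passionate about how cities change")
print(stream.depth(r2.id))
```

Because reflections live in the same stream, the retrieval function can surface them just like raw observations.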
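Top-down plan decomposition can be sketched as a recursive split. This Python sketch assumes the day plan already exists as coarse chunks, replaces the LLM decomposition prompt with an even split, and assumes 60-minute and then 15-minute granularities as one choice within the ranges the notes mention; `PlanItem`, `split`, `decompose`, and `leaves` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PlanItem:
    start_min: int        # minutes after midnight
    duration_min: int
    description: str
    children: list[PlanItem] = field(default_factory=list)


def split(item: PlanItem, step_min: int) -> list[PlanItem]:
    """Hypothetical stand-in for the LLM prompt that decomposes a plan item."""
    parts: list[PlanItem] = []
    t, end = item.start_min, item.start_min + item.duration_min
    while t < end:
        d = min(step_min, end - t)
        parts.append(PlanItem(t, d, f"{item.description} / step {len(parts) + 1}"))
        t += d
    return parts


def decompose(item: PlanItem, granularities: tuple[int, ...] = (60, 15)) -> PlanItem:
    """Recursively refine a coarse chunk: first into hourly blocks, then 15-minute steps."""
    if granularities:
        item.children = split(item, granularities[0])
        for child in item.children:
            decompose(child, granularities[1:])
    return item


def leaves(item: PlanItem) -> list[PlanItem]:
    """The finest-grained actions, in chronological order."""
    if not item.children:
        return [item]
    return [leaf for child in item.children for leaf in leaves(child)]


chunk = decompose(PlanItem(9 * 60, 180, "work on research paper"))
steps = leaves(chunk)
print(len(steps), steps[0].start_min, steps[-1].start_min)
```

Refining only one level at a time means the agent can commit to the broad shape of its day while deferring the fine-grained steps.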

Evaluating Agents

- How do we evaluate whether agents remember, plan, and reflect in a believable manner?

- The researchers asked the agents a series of interview questions and had human evaluators rank the answers, which were then used to calculate TrueSkill ratings for each condition.

- They found that the core components of the agent architecture (observation, planning, and reflection) each contribute critically to the believability of these agents.

- But agents sometimes failed to retrieve certain memories and sometimes embellished their memories with hallucinations.

- Instruction tuning of the underlying LLMs also influenced how agents spoke to each other, making them overly formal or polite.

- Going forward, the promise of generative agents is that we can create accurate simulations of human behavior.